While working on my newest Pluralsight course this past fall, I decided I wanted an easier way to create virtual machines on my local home network. Sure, it’s easy enough to spin up a few VMs on my iMac, but at some point the limitations of 16 GB of RAM and 4 processor cores were going to catch up with me. For this course in particular, I wanted to be able to run a half dozen or more VMs simultaneously to simulate a real corporate network with all or most components of the Elastic Stack running on it. What I really needed was a cheap virtual machine host with a lot of CPU cores, a lot of RAM, and some reasonably fast disks. Before getting started on my course, I set out to build a server exclusively for the purpose of serving virtual machines on my network.

The centerpiece of the server build is the Intel Xeon E5-2670 CPU. The 2670 was released in 2012 with 8 cores (16 threads with Hyper-Threading) and a full 20 MB of L3 cache. By any measure it was a 64-bit x86 workhorse. Originally, 2670s were priced at around $1,500 each, which is far above the ~$1,000 I was hoping to keep the total cost of this server under. As of this writing, however, you can find them on eBay for around $80. If you combine two of them, you end up with 32 logical cores, which is an awful lot to spread around for virtual machines.

I found a used two-pack of the CPUs for $168 on eBay and paired them with a new ASRock EP2C602 dual socket motherboard, which I got for $293, also on eBay. The EP2C602 is an older model board and works with DDR3 memory. This is slower than DDR4 RAM, but the DDR3 board and RAM parts were a lot less expensive. In fact, I found a used kit of 64 GB ECC RAM for $105. Again, I wasn’t really interested in raw speed. I needed capacity, in terms of RAM size and total logical CPU cores, so I could run a lot of VMs simultaneously.

For the rest of the parts, I went directly to Amazon. To supply power I got an EVGA Supernova G2 750 watt supply. The important part to know here is that this power supply has two CPU power connectors, which are needed for a dual socket motherboard. If you are doing a build like this with a dual socket board, make sure your power supply can properly power both CPUs. The processors are cooled with two Cooler Master Hyper 212 fan/heat sink setups.

For the disks, I knew I wanted to use an SSD for the operating system, but for VM storage I wanted a RAID 0 striping setup with cheap disks to get as much bang for my buck as possible. I settled on two Western Digital 1 TB 7200 RPM drives that I planned to stripe together for a total of 2 TB of space. That would be plenty of breathing room, and striping meant reads and writes would be spread across both drives for up to roughly twice the throughput. The trade-off is reliability: RAID 0 has no redundancy, so if either drive fails, the whole volume is lost. For the OS drive I went with a basic KingSpec 64 GB SSD, since I knew this drive would only host the operating system. A run-of-the-mill Asus DVD-RW SATA optical drive rounds out the internals.
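To make the striping trade-off concrete, here is a minimal Python sketch of the capacity and reliability arithmetic for a RAID 0 array. The function names are hypothetical, the 3% annual failure rate per drive is a made-up illustrative number (not a figure for these Western Digital drives), and the model assumes drives fail independently.

```python
def raid0_capacity_tb(drive_sizes_tb):
    """Usable RAID 0 capacity: every drive contributes as much as the smallest one."""
    return len(drive_sizes_tb) * min(drive_sizes_tb)


def raid0_annual_survival(per_drive_failure_rate, drive_count):
    """RAID 0 has no redundancy, so the array survives only if every drive survives.

    Assumes independent failures (a simplification).
    """
    return (1.0 - per_drive_failure_rate) ** drive_count


# Two 1 TB drives, as in this build.
capacity = raid0_capacity_tb([1.0, 1.0])

# ASSUMPTION: 3% annual failure rate per drive, chosen only for illustration.
assumed_afr = 0.03
single_drive_loss = 1.0 - raid0_annual_survival(assumed_afr, 1)
array_loss = 1.0 - raid0_annual_survival(assumed_afr, 2)

print(f"Usable capacity: {capacity} TB")
print(f"Chance of data loss per year, one drive alone: {single_drive_loss:.2%}")
print(f"Chance of data loss per year, 2-drive RAID 0:  {array_loss:.2%}")
```

Under those assumptions, the chance of losing the volume with two striped drives comes out at nearly double that of a single drive, which is the price paid for the extra space and speed.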

All of this is housed inside a Phanteks Enthoo Pro full tower case which has plenty of room for the extra large motherboard, drives, and cooling. Importantly, this case supports the SSI EEB motherboard form factor which is a 12″ by 13″ board. That’s the size of the ASRock dual socket board I mentioned earlier and I wanted to make sure it would fit in the case I bought.

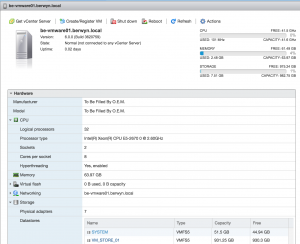

The research I put into all these parts paid off because they all went together perfectly. I chose to use VMware ESXi version 6.5 as my host OS since that’s what we use at work and I know it pretty well. The free version of ESXi does not include some of the features you get with vSphere, like cloning and external storage, but for my needs it would be perfectly fine. Other good options would be Citrix XenServer or Microsoft Hyper-V. (I may rebuild this machine at some point in the future to fiddle with one or both of those, but to date I’ve stuck with VMware.)

I _did_ run into one problem. VMware is very particular about what it considers to be a real hardware RAID controller, and it refused to recognize the onboard “software” RAID controller of the ASRock motherboard. It would only see the disks as individual volumes. After consulting the VMware compatibility list and doing some eBay research, I ordered a used LSI MegaRAID 9260 controller for $119, as well as a 3ware multi-lane SFF-8087 to SATA III cable setup to connect the Western Digital storage drives to the controller. After installing the LSI card, VMware was happy and I was able to create a single 2 TB storage volume using RAID 0 striping.

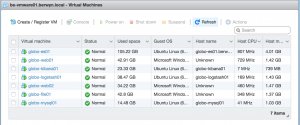

Since adding the LSI MegaRAID card I haven’t had any hardware compatibility problems at all, and the system has been running great. I can run all the virtual machines at the same time with pretty good performance. The VMware client tools install automatically in Windows VMs, and for Linux I use the open-vm-tools package that is available in most modern distributions.

My costs are broken out below. As you can see, I went a little over my $1,000 budget, mostly because I had to add the extra hardware RAID card, but in the end it was worth it. Being able to create as many local VMs on my home network as I wanted while creating my Elastic Stack course was invaluable. You could certainly save a good chunk of money by going with a single Xeon CPU and a single socket motherboard. You’d only get 16 logical cores that way, but that’s still not a bad rig.

Cost Breakdown:

- ASRock dual socket motherboard: $293

- 64 GB DDR3 RAM (used): $106

- (2) Intel Xeon E5-2670 CPUs: $169

- LSI MegaRAID controller (used): $119

- EVGA 750 W power supply: $100

- (2) Cooler Master CPU fan / heatsinks: $46

- (2) Western Digital 7200 RPM 1TB drives: $100

- Phanteks Enthoo Pro full tower case: $100

- Asus DVD-RW drive: $21

- KingSpec 64 GB SSD: $27

- Pack of 5 SATA III cables: $8

- 3Ware RAID card cables: $15

- TOTAL: $1105
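As a quick check of the budget claim, the listed prices can be tallied in a few lines of Python. This is only a sketch using the figures from the list above; the variable and function names are hypothetical, and since the listed prices appear to be rounded to whole dollars, the computed sum may differ from the stated total by a dollar or so.

```python
BUDGET = 1000  # target budget in dollars, from the post

# Prices exactly as listed in the cost breakdown (whole dollars).
parts = {
    "ASRock dual socket motherboard": 293,
    "64 GB DDR3 RAM (used)": 106,
    "(2) Intel Xeon E5-2670 CPUs": 169,
    "LSI MegaRAID controller (used)": 119,
    "EVGA 750w power supply": 100,
    "(2) Cooler Master CPU fan / heatsinks": 46,
    "(2) Western Digital 7200 RPM 1TB drives": 100,
    "Phanteks Enthoo Pro full tower case": 100,
    "Asus DVDRW drive": 21,
    "KingSpec 64 GB SSD": 27,
    "Pack of 5 SATA III cables": 8,
    "3Ware RAID card cables": 15,
}


def total_cost(items):
    """Sum the price of every listed part."""
    return sum(items.values())


total = total_cost(parts)

# Cost of the unplanned hardware RAID card plus the cables it needed.
raid_extras = parts["LSI MegaRAID controller (used)"] + parts["3Ware RAID card cables"]

print(f"Sum of listed prices: ${total}")
print(f"Over budget by: ${total - BUDGET}")
print(f"Without the RAID card and its cables: ${total - raid_extras}")
```

The last line shows that the build would have come in under budget without the RAID controller and its cables.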

Nice guide! Thanks. I’m building a Linux box with an 8700K and Z370. My main rig is a Skylake-X. I want to build a FreeNAS box to join my QNAP and Synology NAS boxes. However, for my home lab, and for running some services like possibly DNS, VPN, and an SCM repo, I want to get a server. I thought about maybe an HP MicroServer or a refurbished 1U rackmount, but I like building my machines. However, I haven’t been paying much attention to the Xeons or server chipsets. It is quite a nebulous area, and you get into compatibility issues, etc. I want to make sure I can install an Ubuntu Server image and MS Server 2016 as well as a hypervisor. You mentioned issues with VMware ESXi and the RAID controller in your post, so I wanted to make sure whatever parts I get and build will be able to do what I need. There are always concerns and things you have to anticipate. Thanks again, it was helpful.

I’m glad you liked it!

When I go looking for those CPUs and the motherboard, they are about 30% higher in cost than you posted. The CPU is around $119 each, and the ASRock motherboard is almost $400. Supermicro boards for that CPU are about $380. Strange that prices went up over time.

Jeez that’s a pain. I haven’t checked on the prices since building this system but I know they can be a bit volatile.

I know, I would have thought they would be getting cheaper as time passes, but I think there is a rise in the number of people building servers for home use. The E5-2670 is a great CPU for the price, but the lack of dual-CPU motherboards that support it pushes the price well above $300. I’ll just have to build a single-CPU, 8-core version; I’m only using it to study for networking certs.

That’s a good idea. The market _DOES_ seem to fluctuate a lot. GPUs are super expensive right now on the used market because of crypto-currency mining.

Great project, JP. Have you calculated the amount of electricity your rig uses?

I haven’t, actually, but I don’t think it’s too much. The power supply is 750 W max, and most of the time, while the VMs sit idle, it’s not pulling that kind of load.

Bonjour JPtoto,

Thanks for all the information. I bought the same parts and will start the build soon (after my trip to LA to fetch the items; I live in France, and this server will just be for home use).

Could you give me your recommendations on allocating cores, vCPUs, and RAM to my few VMs?

(2 × 8-core CPUs, 64 GB RAM)

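| | Cores/vCPUs | RAM (GB) | OS disk (GB) | Data (TB) | Data (TB) |
|---|---|---|---|---|---|
| Host | 1 | 4 | 50 | | |
| **Guests** | | | | | |
| Jeedom (Debian 9, home automation) | 1 | 4 | 50 | 0.5 | |
| Synology (video and audio server) | 2 | 8 | 50 | 2.5 | 4 |
| Windows10Prod (MySQL + PHP) | 2 | 8 | 50 | 0.5 | |
| Windows10Test (audio & video tools) | 2 | 8 | 50 | 1 | |
| Zoneminder (surveillance) | 2 | 4 | 50 | 2 | |
| Total | 10 | 36 | 3800.0 | 10.5 | |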

Also, I have a 120 GB SSD. Would you recommend using it for the ESXi host plus some swap, or just using the two 4 TB drives in RAID to hold the host and the guest VM operating systems?

Thanks

I would recommend using the SSD for the OS install. I also created a volume on my SSD for keeping ISO files and some storage to make good use of that space.

For your VMs, I don’t think Zoneminder will use too many resources.

For Windows 10 MySQL/PHP, you will probably want at least 4 GB of RAM and 2 or 4 CPUs.

For Windows 10 audio and video, probably more RAM and CPUs but you should try different configurations to see what works best.

Debian for home automation probably won’t need much. 2 CPUs and 2 or 4 GB RAM.

I would save as many CPUs and as much RAM as possible for video editing/processing because that will be the heaviest user. Good luck!
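One way to sanity-check an allocation plan like the one proposed above is to total it against what the host offers. The sketch below uses the vCPU and RAM figures from the commenter’s table and the host specs from the post (two 8-core CPUs with Hyper-Threading, 64 GB RAM). The helper names and the shortened VM labels are hypothetical, and it treats one vCPU as one logical core, which is a simplification since a hypervisor can overcommit CPU.

```python
# Host resources from the build: 2 sockets x 8 cores x 2 threads, 64 GB RAM.
HOST_LOGICAL_CORES = 2 * 8 * 2
HOST_RAM_GB = 64

# (vCPUs, RAM in GB) taken from the proposed table.
# "Host" is the reservation the commenter listed for the hypervisor itself.
allocations = {
    "Host": (1, 4),
    "Jeedom": (1, 4),
    "Synology": (2, 8),
    "Windows10Prod": (2, 8),
    "Windows10Test": (2, 8),
    "Zoneminder": (2, 4),
}


def totals(allocs):
    """Return (total vCPUs, total RAM in GB) across all entries."""
    vcpus = sum(cpu for cpu, _ in allocs.values())
    ram_gb = sum(ram for _, ram in allocs.values())
    return vcpus, ram_gb


def headroom(allocs, host_cores, host_ram_gb):
    """Return (unallocated logical cores, unallocated RAM in GB)."""
    vcpus, ram_gb = totals(allocs)
    return host_cores - vcpus, host_ram_gb - ram_gb


used_vcpus, used_ram = totals(allocations)
free_cores, free_ram = headroom(allocations, HOST_LOGICAL_CORES, HOST_RAM_GB)

print(f"Allocated: {used_vcpus} vCPUs, {used_ram} GB RAM")
print(f"Headroom:  {free_cores} logical cores, {free_ram} GB RAM")
```

With that much headroom left over, there is room to give the video-processing VM more cores and memory, in line with the advice above.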

Thanks Jptoto, I am back from California with all the components and have built the system. I have 2 other questions:

– How do you create a second volume on the boot SSD, so that you have an ESXi boot volume and another one for storing your ISOs?

– I wonder, why not put an ESXi boot volume on the SSD along with another 2 or 3 volumes for the guest VM operating systems, and keep their data on the RAID HDDs?

What do you think ?

Philippe

Nice article. I purchased the LSI MegaRAID 9260-8i 8-port card, and FreeNAS will not see the disks; the card also does not support IT mode. Anyway, I was able to create the RAID on the card and then create a datastore. My advice: if you are going to use FreeNAS, go with the LSI 9211.

I am looking at building a system similar to this for a small business. Would this system successfully run 8 – 10 VMs simultaneously?

VM OS – Windows 10 Pro

VM RAM – 4 gb

VM HDD – 120 gb

I think it would, yes, but I’d get the fastest disks you can afford. One downside I’ve noticed with my build is that since I’m using extremely generic, cheap HDDs, the performance, especially on Windows, is pretty slow. If you’re trying to decide where to put your money, I’d put it in disks.

Great Post! Thanks. Can I use the Supermicro X9DRi-F E-ATX Motherboard System Board Dual Socket 2011 instead? Thanks

Joe, thanks for reading! I don’t see why not. That board supports the LGA2011 socket, so it should work just fine.